
Modernizing Legacy Systems Without Halting Operations

Downtime during legacy system modernization can cripple a business. This guide provides a practical, end-to-end playbook for modernizing legacy systems without stopping operations—using patterns like the Strangler Fig, blue-green deployments, event-driven architecture, and careful data migration.

Introduction

Legacy systems are often the hidden bottleneck of digital progress. They harbor years of business logic, brittle data structures, and fragile integrations that can make a simple upgrade feel like a full-blown outage. Yet modernizing these systems is essential to stay competitive, improve security, and unlock new capabilities like AI-driven insights and seamless customer experiences. The challenge is clear: modernize without stopping business operations. This guide provides a practical, field-tested playbook to modernize legacy systems with zero or minimal downtime. It draws on patterns like the Strangler Fig, blue-green deployments, event-driven architectures, and careful data migration. The goal is to deliver continuous value in weeks, not years, while maintaining rigorous risk controls and measurable outcomes.

Why zero-downtime modernization matters

Downtime costs revenue, erodes customer trust, and increases operational risk. For many organizations, a full rewrite is tempting but impractical due to scope, data gravity, and regulatory constraints. A disciplined, incremental approach reduces risk, preserves business continuity, and yields tangible improvements early—while laying the groundwork for a modern, scalable architecture.

A practical playbook for modernizing without stopping operations

Below is a structured approach you can adapt to your context. The stages are designed to be iterative, allowing you to learn, adjust, and deliver value in short cycles.

Phase 1 — Assess and map the current state

- Map criticality: identify systems, modules, data stores, and integrations that would cause the most impact if they failed or were unavailable.

- Baseline performance and reliability: collect current response times, error rates, and MTTR (mean time to recovery). Establish acceptable downtime targets per component and per business process.

- Data gravity and ownership: locate the data sources, owners, and data quality issues. Decide where data resides during migration and how consistency will be maintained.

- Regulatory and security constraints: inventory compliance requirements, audit trails, and access controls that must be preserved during modernization.

Deliverables for Phase 1: an authoritative map of dependencies, a prioritized backlog of modernization candidates, and a risk register with mitigation plans.
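
As a rough illustration of the baselining step, the sketch below derives a p95 latency, an error rate, and a monthly downtime budget from raw numbers. The sample latencies, request counts, and the 99.9% availability target are made-up values for illustration, not recommendations.

```python
import math

def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]

def error_rate(total_requests, failed_requests):
    """Fraction of requests that failed."""
    return failed_requests / total_requests if total_requests else 0.0

def downtime_budget_minutes(availability_target, window_days=30):
    """Minutes of downtime allowed in the window for a given availability target."""
    return (1 - availability_target) * window_days * 24 * 60

# Hypothetical measurements for one legacy endpoint.
latencies_ms = [120, 95, 310, 150, 101, 99, 480, 130, 125, 110]
baseline = {
    "p95_ms": percentile(latencies_ms, 95),
    "error_rate": error_rate(total_requests=20_000, failed_requests=140),
    "budget_min": round(downtime_budget_minutes(0.999), 1),
}
print(baseline)
```

Recording these numbers per component before any change gives later waves something concrete to be compared against.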

Phase 2 — Define the target architecture and strategy

- Choose an incremental strategy: for most organizations, the Strangler Fig pattern offers a safe path to gradually replace functionality without rewiring the whole system at once.

- Decide on architectural layers: consider API gateways, BFFs (backend-for-frontend), microservices where boundaries are clear, and event-driven components for asynchronous processing.

- Define integration contracts: standardize interfaces (APIs, events, data schemas) to reduce surprises during migration.

- Plan data migration approach: determine whether to use dual-write, eventual consistency, or change data capture (CDC) to synchronize systems during transition.

Deliverables for Phase 2: a target reference architecture, a migration roadmap with waves, and governance guidelines for API contracts and data schemas.
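
One way to make contract governance concrete is an automated check that flags breaking changes between two versions of a data schema. The sketch below assumes schemas are plain dictionaries and applies a deliberately conservative rule set (removed fields, changed types, and new required fields all count as breaking); the field names are hypothetical.

```python
def breaking_changes(old_schema, new_schema):
    """List reasons why new_schema could break users of old_schema.

    Schemas are plain dicts: field name -> {"type": str, "required": bool}.
    """
    problems = []
    for name, spec in old_schema.items():
        if name not in new_schema:
            problems.append(f"removed field: {name}")
        elif new_schema[name]["type"] != spec["type"]:
            problems.append(f"type changed: {name}")
    for name, spec in new_schema.items():
        if name not in old_schema and spec["required"]:
            problems.append(f"new required field: {name}")
    return problems

# Hypothetical versions of a customer contract.
v1 = {
    "customer_id": {"type": "string", "required": True},
    "email": {"type": "string", "required": True},
}
v2 = {**v1, "locale": {"type": "string", "required": False}}
v3 = {
    "customer_id": {"type": "integer", "required": True},
    "email": {"type": "string", "required": True},
    "tenant": {"type": "string", "required": True},
}

print(breaking_changes(v1, v2))  # additive change
print(breaking_changes(v1, v3))  # incompatible change
```

A check like this could run in CI so that a contract change is reviewed before it reaches either the legacy or the new path.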

Phase 3 — Build the migration backbone

- Establish an abstraction layer: introduce an API gateway and, if needed, a message broker to decouple legacy components from new services.

- Implement the Strangler Fig components: expose new functionality behind new services that gradually replace old ones behind the scenes.

- Adopt a service-first mindset: design small, cohesive services with clear ownership to reduce risk when replacing monolith portions.

- Institute feature flags: control new functionality, enable rapid rollbacks, and run A/B tests without affecting all users.

Deliverables for Phase 3: a functioning middleware layer, initial new services, and an initial set of feature flags ready for controlled trials.
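
The feature-flag idea above can be sketched as a percentage rollout with stable bucketing, so a given user always lands on the same side of the flag. This is a minimal in-memory illustration, not a substitute for a flag service; the class name, flag name, and hashing scheme are assumptions.

```python
import hashlib

def bucket(flag_name, user_id):
    """Map a (flag, user) pair to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode()).hexdigest()
    return int(digest, 16) % 100

class FeatureFlags:
    def __init__(self):
        self._rollout = {}  # flag name -> percentage of users (0..100)

    def set_rollout(self, flag_name, percent):
        if not 0 <= percent <= 100:
            raise ValueError("percent must be between 0 and 100")
        self._rollout[flag_name] = percent

    def is_enabled(self, flag_name, user_id):
        # Unknown flags are off, so the legacy path stays the default.
        return bucket(flag_name, user_id) < self._rollout.get(flag_name, 0)

flags = FeatureFlags()
flags.set_rollout("new-onboarding", 10)
users = [f"user-{i}" for i in range(1000)]
enabled = [u for u in users if flags.is_enabled("new-onboarding", u)]
print(f"{len(enabled)} of {len(users)} users see the new path")

flags.set_rollout("new-onboarding", 0)  # rollback without a redeploy
print(any(flags.is_enabled("new-onboarding", u) for u in users))
```

Because the bucket depends only on the flag and user, raising the percentage keeps everyone who was already on the new path there, which avoids users flipping back and forth during a rollout.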

Phase 4 — Run a pilot project

- Select a low-risk, high-value module: choose a module with manageable data migration and limited external dependencies.

- Create a lean MVP of the replacement: implement the new module with the same external interfaces as the old one, so switching is seamless.

- Validate end-to-end flows: test all touchpoints—data ingress/egress, third-party integrations, and user interfaces.

- Measure and iterate: compare performance, reliability, and user experience against baselines; adjust backlog accordingly.

Deliverables for Phase 4: a proven, low-risk replacement path and concrete learnings to apply to subsequent waves.
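
To check that a replacement really behaves like the module it replaces, a pilot can feed the same inputs to both implementations and record any differences. The sketch below uses two stand-in fee calculations with a deliberate rounding difference to show what such a parallel run would surface; both functions and the sample inputs are hypothetical.

```python
def legacy_fee(amount_cents):
    """Stand-in for the legacy module: 2% fee rounded down, minimum 50 cents."""
    return max(amount_cents * 2 // 100, 50)

def new_fee(amount_cents):
    """Stand-in for the replacement: same interface, but rounds to nearest."""
    return max((amount_cents * 2 + 50) // 100, 50)

def parallel_run(inputs, legacy, candidate):
    """Call both implementations and collect (input, legacy, candidate) mismatches."""
    mismatches = []
    for value in inputs:
        expected, actual = legacy(value), candidate(value)
        if expected != actual:
            mismatches.append((value, expected, actual))
    return mismatches

sample_amounts = [1000, 2575, 5000, 9999]
for value, expected, actual in parallel_run(sample_amounts, legacy_fee, new_fee):
    print(f"amount={value}: legacy={expected} new={actual}")
```

In practice the inputs would come from recorded or mirrored production traffic, and the legacy result would remain the one returned to callers until mismatches are resolved.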

Phase 5 — Migrate in waves with safe cutovers

- Plan wave-by-wave migration: define clear dependencies, data migration steps, and go/no-go criteria for each wave.

- Use blue-green or canary deployments for cutovers: gradually route traffic from the legacy path to the new path, monitoring for anomalies.

- Dual-maintenance windows: maintain backward compatibility during transition; avoid breaking changes in both old and new paths.

- Establish rollback plans: define precise rollback procedures and automatic fallbacks if a wave underperforms.

Deliverables for Phase 5: a staged migration schedule, controlled cutovers, and documented rollback procedures.
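
Wave planning with explicit dependencies can be sketched as a topological grouping: each wave contains only modules whose prerequisites were migrated in earlier waves. The example uses `graphlib` from the Python standard library (3.9+); the module names and dependency map are hypothetical.

```python
from graphlib import TopologicalSorter

def plan_waves(dependencies):
    """Group modules into waves so each wave depends only on earlier waves.

    dependencies maps a module to the set of modules that must migrate first.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves

# Hypothetical dependency map for a migration backlog.
deps = {
    "customer-profile": set(),
    "ledger": set(),
    "onboarding": {"customer-profile"},
    "payments": {"customer-profile", "ledger"},
    "reporting": {"onboarding", "payments"},
}

for number, wave in enumerate(plan_waves(deps), start=1):
    print(f"wave {number}: {', '.join(wave)}")
```

A circular dependency raises an error here, which is a useful early signal that two modules may need to move together or be decoupled first.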

Phase 6 — Monitor, optimize, and govern

- Implement end-to-end observability: comprehensive logging, metrics, tracing, and dashboards that cover both legacy and modern paths.

- Enforce continuous testing: contract tests for interfaces, integration tests for data flows, and synthetic monitors for critical user journeys.

- Track KPIs and learn: measure deployment frequency, lead time for changes, MTTR, and downtime; use insights to inform the next wave.

- Refine governance: update architecture principles, contract standards, and security controls as the system evolves.

Deliverables for Phase 6: a mature, observable hybrid environment with a sustainable roadmap for ongoing modernization.

Architectural patterns and techniques that enable zero-downtime modernization

Adopting the right patterns is essential to minimize risk and maximize the speed of delivery while users stay uninterrupted.

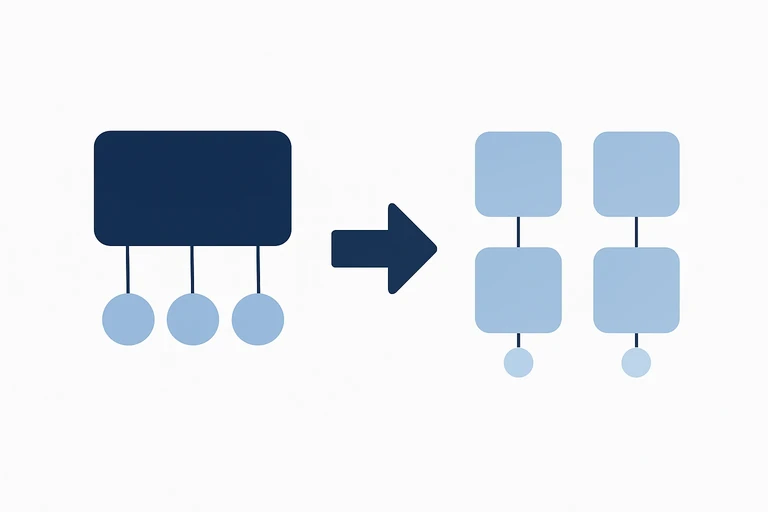

The Strangler Fig pattern

This pattern incrementally replaces parts of a monolith by routing new functionality through new services and gradually strangling the old system as replacements prove stable. It reduces risk, allows early value delivery, and simplifies rollback if issues arise.
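
A minimal sketch of the routing facade at the heart of this pattern is shown below. It assumes requests can be routed by path prefix; the class, handlers, and paths are illustrative and not tied to any particular gateway product.

```python
class StranglerFacade:
    """Routes a request to a new service if its path prefix has been migrated."""

    def __init__(self, legacy_handler):
        self._legacy = legacy_handler
        self._migrated = {}  # path prefix -> new handler

    def migrate(self, prefix, handler):
        self._migrated[prefix] = handler

    def rollback(self, prefix):
        self._migrated.pop(prefix, None)

    def handle(self, path):
        # The longest matching prefix wins; anything unmatched stays on legacy.
        for prefix in sorted(self._migrated, key=len, reverse=True):
            if path.startswith(prefix):
                return self._migrated[prefix](path)
        return self._legacy(path)

def legacy_app(path):
    return f"legacy handled {path}"

def onboarding_service(path):
    return f"new onboarding handled {path}"

facade = StranglerFacade(legacy_app)
before = facade.handle("/onboarding/start")
facade.migrate("/onboarding", onboarding_service)
after = facade.handle("/onboarding/start")
untouched = facade.handle("/payments/42")
facade.rollback("/onboarding")
rolled_back = facade.handle("/onboarding/start")
print(before, after, untouched, rolled_back, sep="\n")
```

Callers only ever talk to the facade, so moving a prefix to a new service, or moving it back, requires no change on the client side.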

API gateway and Backend-for-Frontend (BFF)

An API gateway consolidates access to legacy and new services, providing uniform security, rate limiting, and logging. A BFF layer tailors responses to each client (web, mobile, partner integrations) without forcing all clients through a single, monolithic backend.
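
The BFF idea can be illustrated with two thin functions that shape one backend record differently for each client. The backend call, field names, and client types below are assumptions made for the sketch.

```python
def fetch_customer(customer_id):
    """Stand-in for a backend call routed through the gateway."""
    return {
        "id": customer_id,
        "name": "Ada Example",
        "email": "ada@example.com",
        "segments": ["retail", "premium"],
        "internal_risk_score": 0.12,  # must never reach a client
    }

def mobile_bff(customer_id):
    """Small payload for mobile clients."""
    customer = fetch_customer(customer_id)
    return {"id": customer["id"], "name": customer["name"]}

def web_bff(customer_id):
    """Richer payload for the web client, still without internal fields."""
    customer = fetch_customer(customer_id)
    return {key: customer[key] for key in ("id", "name", "email", "segments")}

print(mobile_bff("c-1"))
print(web_bff("c-1"))
```

Because each client has its own thin layer, the backend behind `fetch_customer` can move from the legacy system to a new service without either client noticing.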

Event-driven architecture and CDC

Moving to asynchronous communication decouples producers and consumers, increasing resilience. Change data capture (CDC) helps keep legacy and new data stores synchronized without blocking operations. Event sourcing and messaging enable scalable, auditable flows.
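
To make the CDC idea more tangible, the sketch below applies a stream of change events to a new store and ignores redelivered events, since most messaging setups can deliver an event more than once. The event shape (`offset`, `op`, `key`, `row`) is a hypothetical format, not that of any specific CDC tool.

```python
def apply_change(store, applied_offsets, event):
    """Apply one change event to the target store, skipping replays.

    event: {"offset": int, "op": "upsert" or "delete", "key": str, "row": dict or None}
    Returns True if the event changed the store, False if it was a replay.
    """
    if event["offset"] in applied_offsets:
        return False  # already applied, so redelivery is harmless
    if event["op"] == "upsert":
        store[event["key"]] = event["row"]
    elif event["op"] == "delete":
        store.pop(event["key"], None)
    else:
        raise ValueError(f"unknown operation: {event['op']}")
    applied_offsets.add(event["offset"])
    return True

# Hypothetical change stream captured from the legacy database.
events = [
    {"offset": 1, "op": "upsert", "key": "c1", "row": {"email": "old@example.com"}},
    {"offset": 2, "op": "upsert", "key": "c2", "row": {"email": "bob@example.com"}},
    {"offset": 3, "op": "upsert", "key": "c1", "row": {"email": "new@example.com"}},
    {"offset": 2, "op": "upsert", "key": "c2", "row": {"email": "bob@example.com"}},
    {"offset": 4, "op": "delete", "key": "c2", "row": None},
]

new_store, applied = {}, set()
results = [apply_change(new_store, applied, event) for event in events]
print(results)
print(new_store)
```

The sketch assumes events arrive in order apart from redeliveries; a real consumer would also need to persist the applied offsets alongside the data.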

Data migration strategies: dual-write, eventual consistency, and CDC

- Dual-write: every change is written to both the legacy and new systems during transition, which keeps the stores close to parity but requires robust conflict resolution and handling of partial failures.

- Eventual consistency: accept temporary discrepancies with reconciliation processes and clear SLAs for consistency.

- CDC-based replication: capture changes from legacy databases and propagate them to new stores asynchronously.
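
The dual-write and reconciliation ideas in the list above can be sketched together. The example assumes the legacy store stays the source of truth, treats a failed write to the new store as non-fatal, and repairs the gap later; `FlakyStore` is a made-up stand-in that simulates an outage.

```python
class FlakyStore(dict):
    """Dictionary-backed store that can simulate an outage."""
    fail = False

    def __setitem__(self, key, value):
        if self.fail:
            raise ConnectionError("new store unavailable")
        super().__setitem__(key, value)

class DualWriter:
    """Writes to the legacy store first, then best-effort to the new store."""

    def __init__(self, legacy_store, new_store):
        self.legacy, self.new = legacy_store, new_store
        self.failed_keys = []

    def write(self, key, value):
        self.legacy[key] = value  # legacy remains the source of truth
        try:
            self.new[key] = value
        except ConnectionError:
            self.failed_keys.append(key)  # left for reconcile() to repair

def reconcile(legacy_store, new_store):
    """Copy missing or differing records from legacy to new; return repaired keys."""
    repaired = []
    for key, value in legacy_store.items():
        if key not in new_store or new_store[key] != value:
            new_store[key] = value
            repaired.append(key)
    return repaired

legacy, new = {}, FlakyStore()
writer = DualWriter(legacy, new)
writer.write("c1", {"email": "ada@example.com"})
new.fail = True  # simulated outage of the new store
writer.write("c2", {"email": "bob@example.com"})
new.fail = False
repaired = reconcile(legacy, new)
print(writer.failed_keys, repaired, dict(new) == legacy)
```

Choosing one store as the source of truth keeps conflict resolution simple; accepting writes on both sides independently would need a more elaborate merge strategy than this sketch shows.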

Zero-downtime deployment methods

- Blue-green deployments: run two identical environments, verify the new one, then switch traffic to it while keeping the old environment on standby for fast rollback.

- Canary releases: roll out changes to a small subset of users, monitor, and progressively widen the rollout.

- Feature flags: decouple feature deployments from code releases, enabling quick rollbacks and controlled experimentation.
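
The canary release described in the list above can be sketched as a loop that widens traffic step by step and falls back to the old version as soon as a reading exceeds a threshold. The traffic steps, the error-rate readings, and the 1% threshold are illustrative assumptions.

```python
def run_canary(steps, observe_error_rate, max_error_rate):
    """Widen traffic to the new version step by step; roll back on a bad reading.

    steps: increasing traffic percentages, for example [5, 25, 50, 100]
    observe_error_rate: callable taking the current percentage, returning a fraction
    Returns (final_percentage, history of (percentage, error_rate) readings).
    """
    history = []
    for percent in steps:
        rate = observe_error_rate(percent)
        history.append((percent, rate))
        if rate > max_error_rate:
            return 0, history  # send all traffic back to the old version
    return steps[-1], history

# Hypothetical error-rate readings at each traffic level.
healthy = {5: 0.002, 25: 0.003, 50: 0.004, 100: 0.003}
degraded = {5: 0.002, 25: 0.031, 50: 0.004, 100: 0.003}

good_result = run_canary([5, 25, 50, 100], healthy.get, max_error_rate=0.01)
bad_result = run_canary([5, 25, 50, 100], degraded.get, max_error_rate=0.01)
print(good_result)
print(bad_result)
```

In a real rollout, `observe_error_rate` would query monitoring after a soak period at each step rather than read from a dictionary.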

Containerization and orchestration

Packaging legacy components and new services into containers simplifies deployment and isolation. Kubernetes or other orchestrators provide scaling, health checks, and automated rollbacks, reducing mean time to recovery during migrations.

Practical considerations: security, governance, and risk management

Modernization is not only about technology; it’s also about people, processes, and protections. Keep these guardrails in place to protect value and keep stakeholders aligned.

- Security by design: enforce least privilege, encryption in transit and at rest, and regular security testing across both legacy and modern layers.

- Compliance continuity: preserve audit trails, data retention policies, and regulatory reporting during transitions.

- Change management: communicate early, train teams, and define clear ownership for each service or module.

- Risk management: maintain a living risk register, with impact, probability, mitigation, and owners for each migration wave.

Measuring success: KPIs that matter during modernization

Track metrics that reflect both technical health and business outcomes. Consider these indicators:

- Downtime during waves vs. baseline

- Deployment frequency and lead time for changes

- Change failure rate and MTTR

- User experience metrics (latency, error rates, satisfaction scores)

- Data consistency indicators across legacy and new stores

- Cost of ownership and time-to-value for new capabilities
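
As a small worked example, three of the indicators above (deployment frequency, change failure rate, and MTTR) can be computed from basic records. The deployment outcomes and incident timestamps below are invented for illustration.

```python
from datetime import datetime, timedelta

def deployments_per_week(deployment_count, period_days):
    """Average number of deployments per week over the period."""
    return deployment_count / (period_days / 7)

def change_failure_rate(outcomes):
    """outcomes: list of booleans, True when a deployment caused a failure."""
    return sum(outcomes) / len(outcomes) if outcomes else 0.0

def mean_time_to_recovery(incidents):
    """incidents: non-empty list of (started, resolved) datetime pairs."""
    durations = [resolved - started for started, resolved in incidents]
    return sum(durations, timedelta()) / len(durations)

# Hypothetical records for a four-week migration wave.
outcomes = [False, False, True, False, False, False, True, False]
incidents = [
    (datetime(2025, 3, 4, 9, 0), datetime(2025, 3, 4, 9, 30)),
    (datetime(2025, 3, 18, 14, 0), datetime(2025, 3, 18, 15, 30)),
]

frequency = deployments_per_week(len(outcomes), period_days=28)
failure_rate = change_failure_rate(outcomes)
mttr = mean_time_to_recovery(incidents)
print(frequency, failure_rate, mttr)
```

Computing the same figures for the baseline period and for each wave makes the comparison against the pre-migration state explicit.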

Real-world example: a phased modernization scenario

Company A, a mid-sized financial services provider, faced a brittle core legacy system with aging integrations and a monolithic data store. Here’s how a phased approach could unfold:

- Phase 1: Inventory integrations with core payment and customer data; identify a candidate module for replacement – the customer onboarding flow.

- Phase 2: Implement an API gateway and a new onboarding microservice; expose the same external API surface as the legacy path, enabling a clean Strangler Fig path.

- Phase 3: Pilot with a small user group and compare performance and error rates; enable a feature flag to roll back instantly if issues arise.

- Phase 4: Extend the new onboarding path to 25% of users; monitor CTR, drop-off rates, and data parity; gradually route more traffic as confidence grows.

- Phase 5: Complete migration of onboarding while preserving legacy for a defined sunset window; decommission the old module once the new system proves stable.

By following a disciplined wave-based plan, Company A maintained continuous service, achieved early value, and reduced the risk typically associated with big-bang migrations.

Conclusion

Modernizing legacy systems without stopping operations is not only possible—it’s a strategic imperative. The pattern is clear: start small, decouple components, use controlled deployments, and migrate in measurable waves. The payoff is substantial: improved security, faster delivery of new capabilities (including AI-enabled features in the future), better customer experiences, and more predictable risk management.

How Multek can help

Multek specializes in practical software modernization that balances speed, quality, and governance. Our approach combines modern engineering practices, user-centric design, and agile delivery to help you unlock value in weeks rather than years. From architectural guidance and hands-on implementation to robust testing, monitoring, and compliance, we partner with you to chart a safe, incremental path to a future-ready platform.

Ready to start?

If you’re planning a legacy modernization initiative and want a concrete, risk-aware plan that minimizes downtime, reach out to Multek. We’ll collaborate to tailor a phased roadmap, select the right patterns for your domain, and help you realize rapid, sustainable value.